For some time now I've been experimenting with a photographic technique known as HDR -- "High Dynamic Range" -- imaging. Essentially, the principle behind HDR is that a digital camera, and to some extent even a film camera, can only capture a very narrow range of brightness in a single exposure, especially when compared to the human eye and brain. For example, when you are standing outside in broad daylight and you look at your friend, who is standing in the shadow of a tree, you can see him or her just fine. You can also see the parts of the ground that aren't in shadow just fine, and likely the sky too, even if you're looking right into the sunlight.

With a camera, it's different. You must consciously decide whether you want the person in the shadow, the ground outside the shadow, or the sky to be rendered correctly. If you choose the sky, then when you get the photographic prints back, the ground will be dark and your friend will be entirely covered in the blackest of shadows. If you choose your friend, then the sky will be white, not blue, and the ground that isn't in shadow will be extremely bright and washed out. This is a limitation of the technology: with film, it's a limitation of chemistry; with digital cameras, it's a limitation of the design of the chip that captures the light. The human eye and brain outperform all cameras in this respect by a very significant margin.

HDR attempts to compensate for this. Essentially, the photographer takes several photographs of the same scene at different exposures and blends them together using a computer algorithm. For example, the photographer would take one picture in which your friend is rendered correctly, another in which the sky is rendered correctly, and a third in which the ground is rendered correctly. The photographer then feeds these digital photos into the software, and after a lot of manual tweaking of the image, the computer spits out an image in which all three -- ground, sky, and friend -- are correctly rendered. It's not magic, but it's damned close.
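For the curious, here is a toy sketch of the blending idea in Python. It is not what any particular HDR program actually does; it is a stripped-down form of "exposure fusion," under the assumption that each photo is a grid of brightness values between 0 (black) and 1 (white). The function names, the mid-gray target of 0.5, and the `sigma` value are all made up for illustration. Each pixel in the result is a weighted average of that pixel across the exposures, where exposures that rendered it close to mid-gray count the most.

```python
import math


def well_exposedness(value, mid=0.5, sigma=0.2):
    """Weight a brightness in [0, 1]: near 1 at mid-gray, small near black or white.

    The Gaussian shape, `mid`, and `sigma` are illustrative assumptions.
    """
    return math.exp(-((value - mid) ** 2) / (2 * sigma ** 2))


def fuse_exposures(exposures):
    """Blend same-sized grayscale images (lists of rows of floats in [0, 1]).

    For every pixel, take a weighted average over all exposures, trusting
    each exposure in proportion to how well it exposed that pixel.
    """
    height = len(exposures[0])
    width = len(exposures[0][0])
    fused = []
    for y in range(height):
        row = []
        for x in range(width):
            values = [image[y][x] for image in exposures]
            weights = [well_exposedness(v) for v in values]
            total = sum(weights)  # always > 0 for inputs in [0, 1]
            row.append(sum(w * v for w, v in zip(weights, values)) / total)
        fused.append(row)
    return fused


# A made-up one-row "scene" with three pixels: friend, ground, sky.
exposed_for_friend = [[0.50, 0.95, 1.00]]  # ground and sky blown out
exposed_for_ground = [[0.10, 0.50, 0.90]]  # friend dark, sky too bright
exposed_for_sky = [[0.00, 0.15, 0.50]]     # friend black, ground dark

fused = fuse_exposures([exposed_for_friend, exposed_for_ground, exposed_for_sky])
print([round(v, 2) for v in fused[0]])
```

In the fused row, all three pixels land in the middle of the brightness range, which no single exposure managed. Real HDR software does a great deal more (aligning the frames, blending smoothly across neighboring pixels, and tone mapping), which is where all the manual tweaking comes in.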

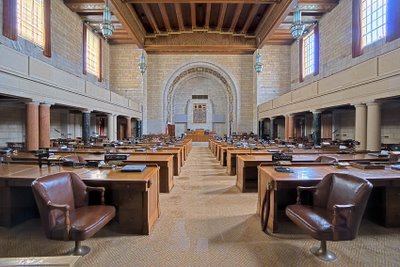

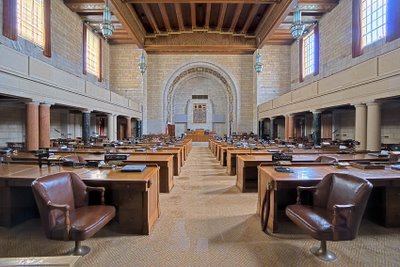

My last batch of HDR images was of the Nebraska Capitol building exterior at night. Today, I decided to walk over to the capitol and try this technique on the interior. I got some pretty cool (in my opinion!) results. Click the photos to make them larger.

No, I haven't given up on normal photography. ;) Here's a couple from today that aren't HDR:

3 comments:

Hey Ryan,

Those interior shots of the capitol really show off the advantages of HDR compositions. How many different exposures were those? I'd love to see some regular interior shots as comparison.

Photoshop CS2 should arrive in the mail any day now; I can't wait to experiment with this on my own.

-Rockwell

How can I get HDR images from NO STILL situations?

I use the auto exposure bracketing function on my Canon camera for NO STILL situations. That works well.
